Yuhang He (Henry)

I am currently a Senior Researcher at Microsoft Research (MSR) in Vancouver, Canada. Before that, I received my DPhil (Ph.D.) from the Department of Computer Science, University of Oxford, where I was co-advised by Prof. Andrew Markham and Prof. Niki Trigoni. I interned at Mitsubishi Electric Research Laboratories (MERL), MA, US, from Jun. 2023 to Dec. 2023, and at the Microsoft Applied Sciences Group in Munich, Germany, from May 2024 to Aug. 2024. I received my B.Eng. from Wuhan University, China.

My research interests broadly lie in multimodal 3D spatial intelligence, crossmodal content generation, and signal-processing-inspired world model learning and system design.

Feel free to drop me an email (yuhanghe[at]microsoft.com) if you would like to get in touch.

Interests

- 3D Spatial Intelligence

- Multimodal Learning

- Crossmodal Generation

- World Models

Education

- Ph.D. in Computer Science, University of Oxford

- B.Eng. in Remote Sensing and Photogrammetry, Wuhan University

# News

- One paper accepted by EMNLP 2025. 2025.08.

- One paper accepted by ICCV 2025. 2025.07.

- Served as area chair for 3DV 2026 and COLM 2025. 2025.05.

- One paper accepted by ICASSP 2025. 2024.12.

- One paper accepted by WACV 2025. 2024.10.

- Served as a program committee member for AAAI 2025 and area chair for ICASSP 2025. 2024.09.

- One paper accepted by NeurIPS 2024. 2024.09.

- One paper accepted by Nature Communications Engineering (paper link).

- Started internship at Microsoft's Applied Sciences Group (ASG) in Munich, Germany. 2024.05.

- One paper accepted by ICML 2024. 2024.05.

- Won a student volunteer scholarship for AAAI 2024. 2023.12.

- One paper accepted by AAAI 2024. 2023.12.

- Accepted to the WACV 2024 Doctoral Consortium. 2023.11.

- One paper accepted by WACV 2024. 2023.10.

- Started internship at Mitsubishi Electric Research Laboratories (MERL). 2023.06.

- One paper accepted by Robotics: Science and Systems (RSS) 2023. 2023.04.

- One paper accepted by AISTATS 2023. 2023.01.

- Completed confirmation viva with examiners Prof. Alex Rogers and Assoc. Prof. Ronald Clark. 2022.10.

- Received Interspeech 2022 travel grant. 2022.07.

- Paper accepted by Interspeech 2022. 2022.06.

- Served as a student volunteer at ICML 2021. 2021.06.

- Paper accepted by ICML 2021. 2021.05.

- Completed transfer viva with examiners Prof. Tam Vu and Prof. Alex Rogers. 2021.01.

# Publications

For the full publication list, please refer to Google Scholar or the → Full list.

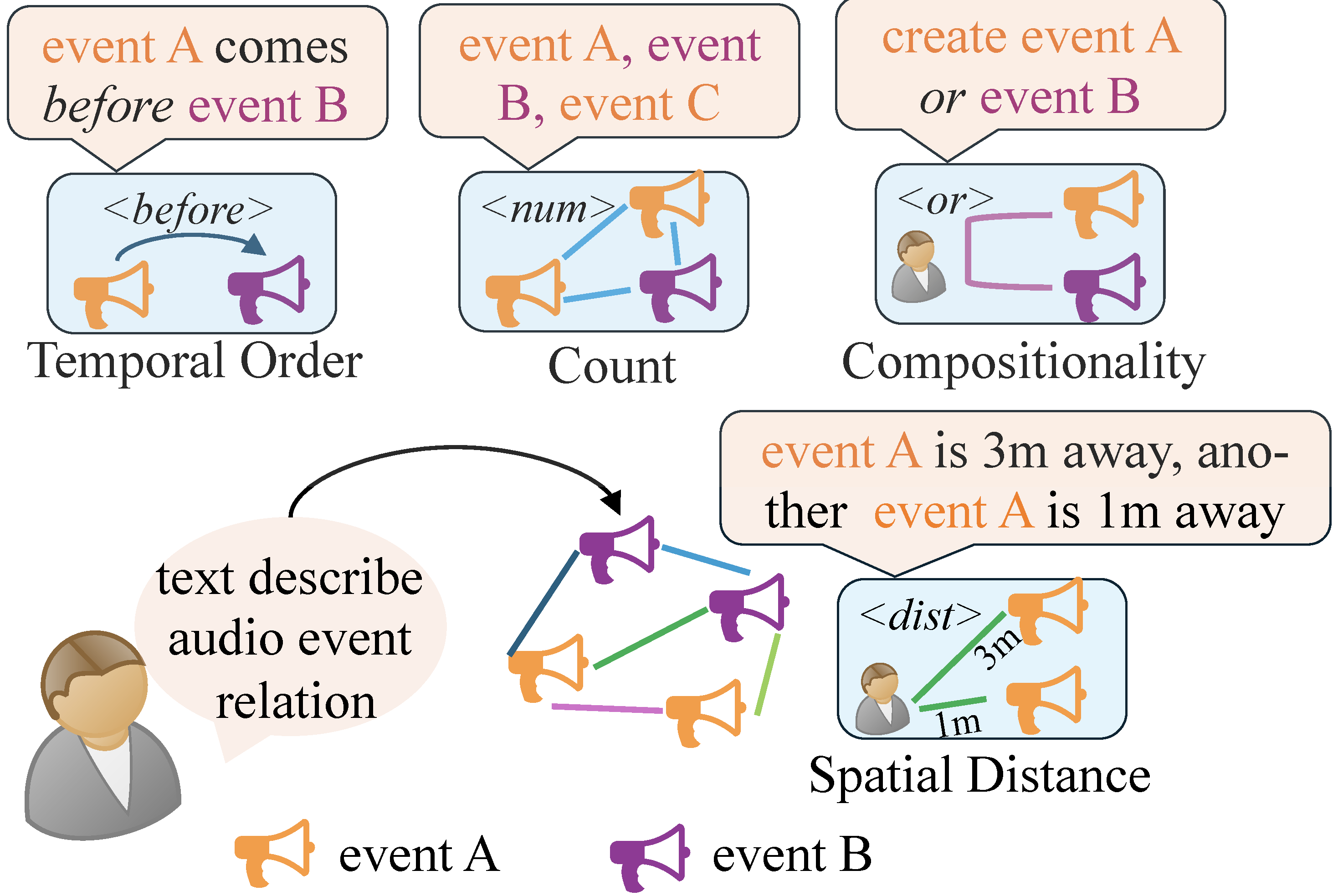

RiTTA: Modeling Event Relations in Text-to-Audio Generation

Yuhang He, Jain Yash, Xubo Liu, Andrew Markham, Vibhav Vineet.

Conference on Empirical Methods in Natural Language Processing (EMNLP Main), 2025.

We study audio event relation modeling in the text-to-audio (TTA) generation task, proposing a new benchmark and a novel evaluation protocol.

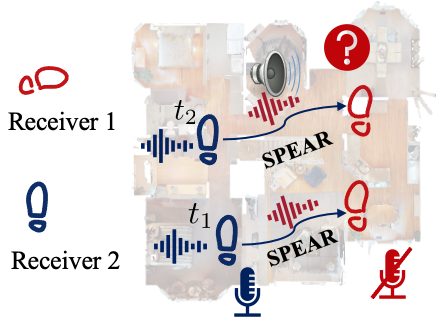

SPEAR: Receiver-to-Receiver Acoustic Neural Warping Field

Yuhang He, Shitong Xu, Jia-Xing Zhong, Sangyun Shin, Niki Trigoni, Andrew Markham.

We propose receiver-to-receiver neural prediction of spatial acoustic effects at an arbitrary target position from a reference position. It requires neither the sound source position nor room acoustic properties.

Deep Neural Room Acoustics Primitive

Yuhang He, Anoop Cherian, Gordon Wichern, Andrew Markham.

The 41st International Conference on Machine Learning (ICML), 2024.

We introduce a novel framework to learn a continuous neural room acoustics field that implicitly encodes all essential sound propagation primitives for each enclosed 3D space.

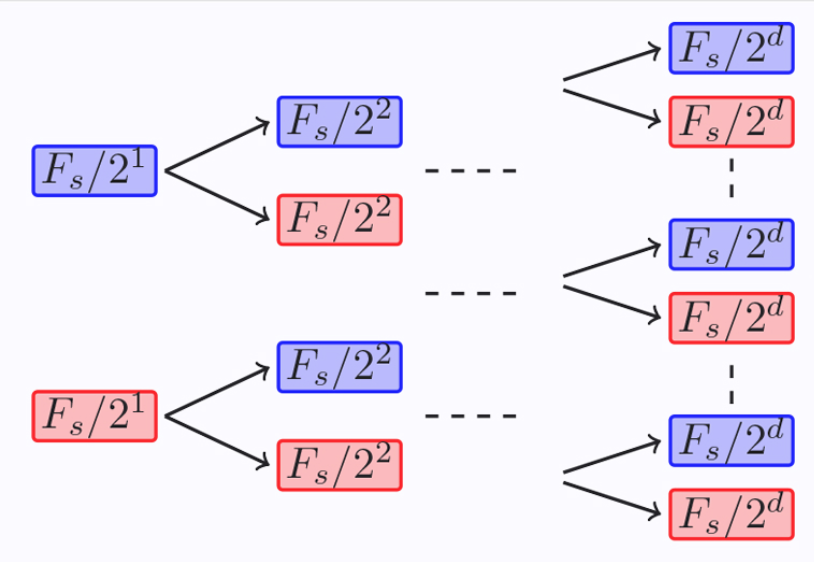

SoundCount: Sound Counting from Raw Audio with Dyadic Decomposition Neural Network

Yuhang He, Zhuangzhuang Dai, Long Chen, Niki Trigoni, Andrew Markham.

The 38th Annual AAAI Conference on Artificial Intelligence (AAAI), 2024.

We introduce a learnable dyadic decomposition framework that learns a more representative time-frequency representation from highly polyphonic, loudness-varying sound waveforms by dyadically decomposing the waveform in a multi-stage, hierarchical manner.

Sound3DVDet: 3D Sound Source Detection Using Multiview Microphone Array and RGB Images

Yuhang He, Sangyun Shin, Anoop Cherian, Niki Trigoni, Andrew Markham.

IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2024.

We introduce a novel task: 3D sound source localization and classification from multiview acoustic-camera recordings. The sound sources lie on objects' physical surfaces but are visually unobservable, which reflects application scenarios such as gas leaks.

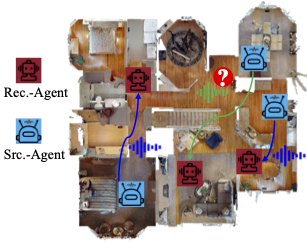

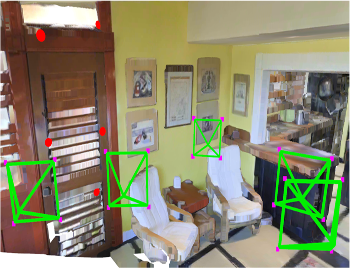

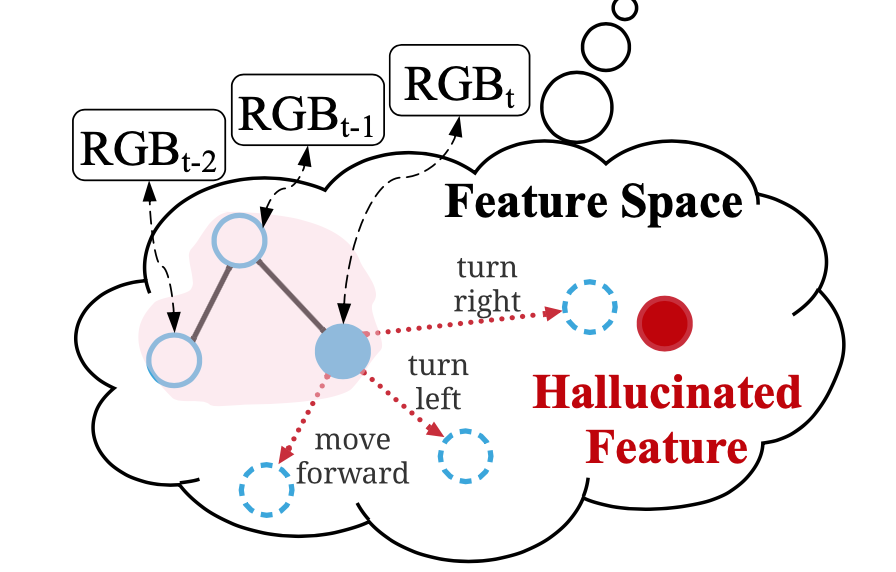

Metric-Free Exploration for Topological Mapping by Task and Motion Imitation in Feature Space

Yuhang He, Irving Fang, Yiming Li, Rushi Bhavesh Shah, Chen Feng.

Robotics: Science and Systems (RSS), 2023.

We propose DeepExplorer, a metric-free method that efficiently constructs a topological map to represent an environment. DeepExplorer exhibits strong sim2sim and sim2real generalization capability.

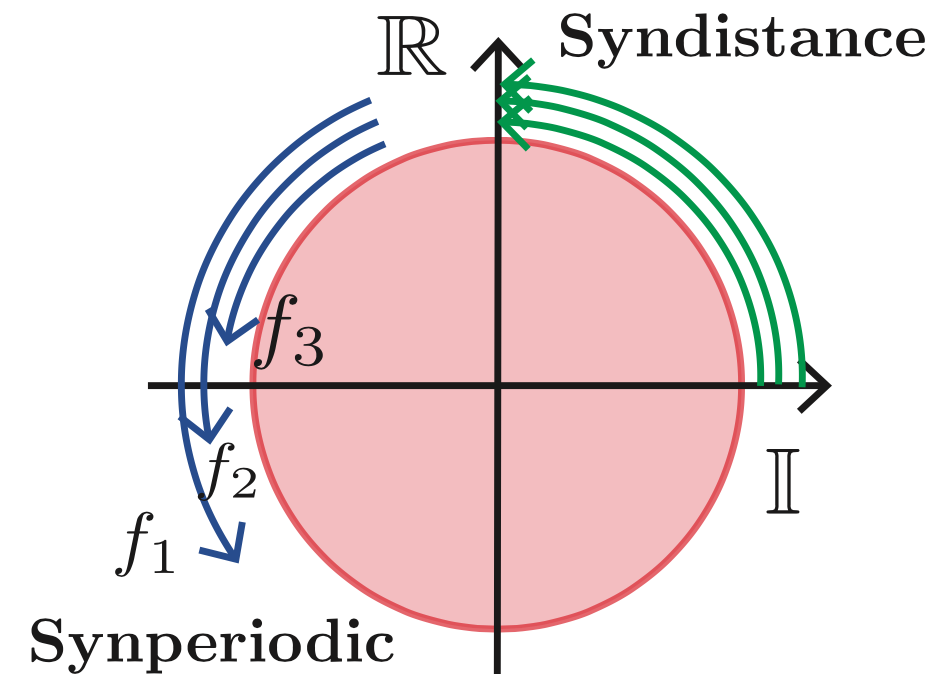

SoundSynp: Sound Source Detection from Raw Waveforms with Multi-Scale Synperiodic Filterbanks

Yuhang He, Andrew Markham.

International Conference on Artificial Intelligence and Statistics (AISTATS), 2023.

We propose a novel framework to construct learnable sound signal processing filter banks that achieve multi-scale processing in both the time and frequency domains.

SoundDoA: Learn Sound Source Direction of Arrival and Semantics from Sound Raw Waveforms

Yuhang He, Andrew Markham.

Interspeech, 2022.

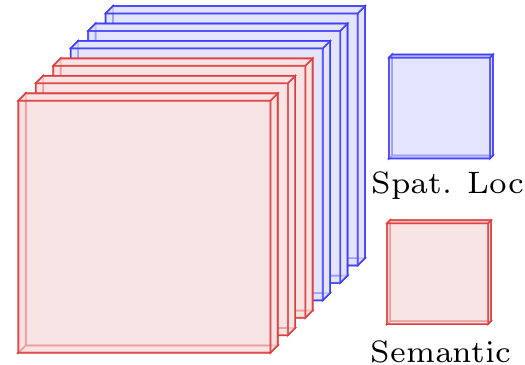

We propose a novel sound event direction of arrival (DoA) estimation framework with a novel filter bank that jointly learns representations relevant to both sound event semantics and spatial location.

SoundDet: Polyphonic Moving Sound Event Detection and Localization from Raw Waveform

Yuhang He, Niki Trigoni, Andrew Markham.

International Conference on Machine Learning (ICML), 2021.

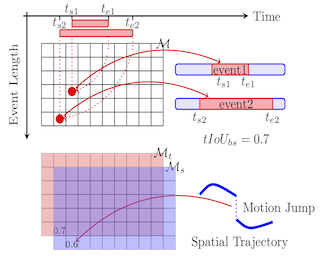

We propose a novel framework for detecting polyphonic and moving sound events. We also propose novel object-based evaluation metrics to evaluate performance more objectively.

# Public Office Hours

I am always happy to chat with people who are interested in my work. If you would like to chat, you can book a time slot through the following office hours, which I keep up to date.